Metadata descriptions. Can AI write them for you?

TL;DR – No

The future is metadata

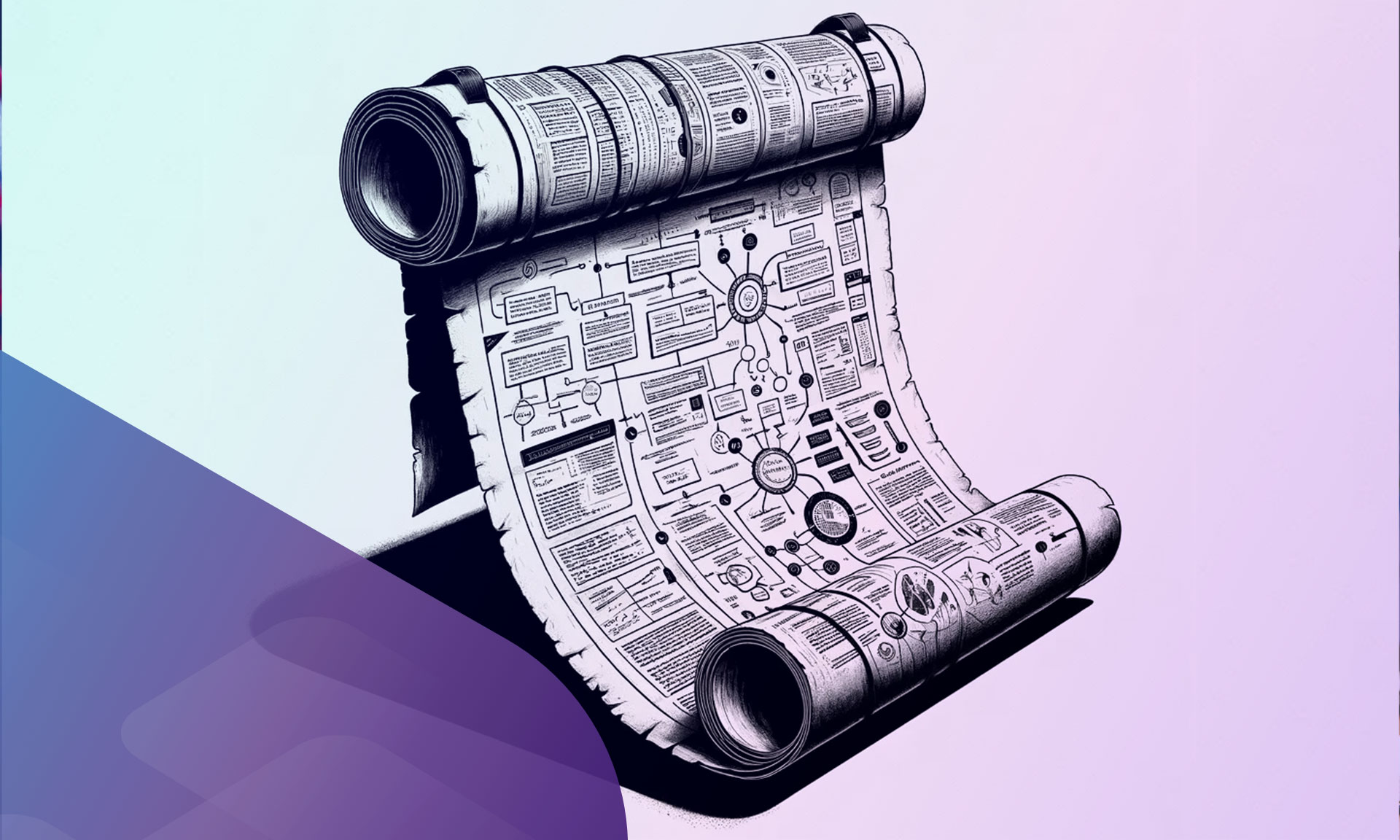

Documenting your org and metadata changes is valuable: process maps, architecture diagrams, ERD, and metadata descriptions. But it is only valuable the next time that you go back to that metadata item to make a change. It is “delayed gratification” or as Kristi Campbell MVP put it “it’s a gift to your future self”. So it is easy to skip the documentation in the rush to release. And you never have time after implementation to update the documentation. There is a lot more documentation than just descriptions that you need to manage your org.

Metadata and documentation are now being talked about in Salesforce keynotes, and Marc Benioff is talking about metadata on investor earnings calls. This is because metadata is seen as a competitive differentiator, particularly as it makes the integration of the core platform with Data Cloud and Einstein possible without code. And metadata descriptions are now being talked about because Einstein Copilot uses them to make decisions.

So, can AI help us write descriptions and how do we get started?

Copilot understands your business. You’ve taught Salesforce your business with metadata – with custom fields. It knows that there is a “High Value” custom field. It interpreted that.

John Kucera, SVP Product Management, Salesforce

@TDX24 Keynote

Einstein Copilot reads metadata descriptions

I encourage you to watch the TDX24 Keynote where they give an overview of the Einstein 1 Platform, how it is integrated into Data Cloud, and how AI can take action. It is available on Salesforce+. This demo is also being presented at the World Tours.

With the launch of Einstein 1 and the powerful AI capabilities, there is suddenly a more pressing reason for filling out descriptions. Einstein Copilot uses descriptions for the core platform and Data Cloud metadata so that it can perform its magic. In the compelling demos at TDX and World Tours, you can see that Copilot is being prompted with just a few sentences and it is making sense of the request. It is going out and looking for fields with the data it needs. You are now telling it where to look. It is also looking for the Flows or Apex that it can run to take action and chain those actions together.

For all of those of you who have been fastidiously documenting…. You are done. And for the slackers out there, you have another reason to add descriptions. You give a description to an action, just like you would introduce it to a colleague. That teaches Einstein. Let’s give it up for documentation. I love it.

John Kucera, SVP Product Management, Salesforce

@TDX Keynote

Will Einstein Copilot be confused, or disappointed?

If there are no descriptions, Einstein Copilot is going to have to rely on field labels and API names. Let’s hope that you are using the standard objects and fields as they were intended and that you had strong naming conventions for custom objects and custom fields. If not, you could be getting some very inaccurate results.

Now there is a huge incentive to go back and document the important metadata. To do that you need to prioritize the work as it is impossible and unrealistic to document your entire org. We’ll talk a little later about how you select the use cases for AI so that you can work out what is important metadata to document. You also need to streamline the creation of that documentation with another audience in mind: AI (Einstein Copilot and 3rd party apps like ElementsGPT).

Einstein Copilot is everywhere and the reason it can do that is because it’s reading all the data and metadata to give you instant insights.

Jon Moore, Director Product Marketing, Salesforce

@Washington DC World Tour

Metadata descriptions

If you are going to start documenting, don’t limit yourself to just what Einstein needs. Good documentation accelerates time to value for future changes. It reduces the time to do impact analysis and helps you identify metadata that can be removed to clean up technical debt. It can be read by AI like ElementsGPT to help you architect and design better solutions.

Whilst there is more documentation required than descriptions, let’s start there. Let’s look at what metadata can be documented using the descriptions. However, there are several challenges with this approach. Not all metadata items have descriptions, and for those that do, some have a character limit so you need to be very succinct. And finally, if AI is reading this information you need to make sure that it is able to understand what you’ve written.

AI doesn’t understand your organization-specific acronyms and abbreviations. It is not good at making assumptions. It was not there when you made architectural or design decisions. So it doesn’t understand why. We wrote a standard called MDD (Metadata Description Definition) to help you understand how to structure your descriptions. This is summarized later in this article.

Einstein 1 metadata and description fields

Below is a table with the most commonly used metadata and the availability and size of the description field.

| Metadata | Description field | Length |
|---|---|---|
| Standard Object | Not editable | |
| Standard Field | Not editable | |
| Custom Object | Yes | 998 characters |
| Custom Field | Yes, but not the name field | 998 characters |
| Page Layout | No description | |
| Lightning Pages | Yes | 255 characters, which is not enough if you have complex dynamic forms |
| List View | No description | |
| Actions | Yes | 255 characters |
| Record Types | Yes | 255 characters |
| Apex | No, but you can comment code | |
| Process Builder Workflows | Yes | No limit, but you can only see the first 50 characters in the listing and 1020 characters are visible if you roll over the description field. |
| Flows | Yes for summary and each element | No limit, but to be able to see the description in the Flow canvas, it is best to keep it to less than 1000 characters. If you edit the element you can scroll through the description. |
| Data Cloud Sources | No description | |
| Data Cloud DLO Objects | Description | |
| Data Cloud DLO Fields | No description | |
| Data Cloud DMO Objects | No description | |
| Data Cloud DMO Fields | No description | |
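
The limits in the table above lend themselves to a simple check before a description is saved. The sketch below is one possible way to do it in Python, assuming the character limits listed in the table. The names `DESCRIPTION_LIMITS` and `check_description` are hypothetical and are not part of any Salesforce API.

```python
from typing import Optional

# Character limits taken from the table above; None means no stated limit.
# The keys are illustrative labels, not Metadata API type names.
DESCRIPTION_LIMITS: dict[str, Optional[int]] = {
    "Custom Object": 998,
    "Custom Field": 998,
    "Lightning Page": 255,
    "Action": 255,
    "Record Type": 255,
    "Flow": None,
}


def check_description(metadata_type: str, description: str) -> list[str]:
    """Return a list of problems found with a description (empty if none)."""
    if metadata_type not in DESCRIPTION_LIMITS:
        return [f"{metadata_type}: no description limit recorded for this type"]
    problems = []
    text = description.strip()
    if not text:
        problems.append(f"{metadata_type}: description is empty")
    limit = DESCRIPTION_LIMITS[metadata_type]
    if limit is not None and len(text) > limit:
        problems.append(
            f"{metadata_type}: {len(text)} characters exceeds the limit of {limit}"
        )
    return problems
```

A check like this only tells you whether a description fits. It says nothing about whether the description explains why the item exists.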

Standard descriptions

Did you know that there is a Salesforce site that lists the description of most (but not all) the standard fields? There is also a Salesforce site for the Data Cloud DMO objects and field descriptions. Sadly most of these are what rather than why. This is to be expected as the authors of this content don’t know how or why you are going to use the fields.

There are some classic descriptions from those sites that add no value at all. For example:

- Metadata item: Account object, AnnualRevenue

- Description: Estimated annual revenue of the account.

Here is our suggestion for a better description: The revenue for the account is the sum of all closed opportunities (new and renewal), year to date. It is updated when the opportunity stage changes. It is reduced if a credit note is issued. It is reported in a dashboard used by execs for their monthly financial review called MonthlyExecDashboard.

But only someone with knowledge of how the field is used could write this description. Could AI write it?

AI-generated documentation

Maybe AI can help? There are several ISVs claiming that their AI capabilities can document your entire org. It seems too good to be true. As always, it is not quite as simple as it sounds. So can AI document your org for you? Yes, and no. Let’s dig into what you need vs what is available.

Automation and code descriptions

For Apex, AI could look at the code and interpret it. It could then add a summary description as a comment block. For Flow, AI could look at the elements, work out what they are doing, and update the descriptions with a summary. It could even update each of the elements with descriptions of what it is doing.

This is valuable, but it doesn’t answer why it was created. And of course, you need to check that AI has correctly interpreted what the Apex or Flow is doing. If you use AI to create descriptions for every Apex and every Flow in your org, you will have a massive job going through and validating all the descriptions. You need a better approach, which we cover later.

Objects and field descriptions

AI can only describe what the metadata item is doing. This is helpful for automations which can get complex, particularly those that were built some time ago. But AI cannot add a huge amount of value for other object-related metadata. What you really need to know for metadata such as a custom object (including DLO and DMO), field, validation rule, record type, page layout, or Lightning page is why it was created. What is the background and what decisions were made?

Example 1: NDA checkbox

- NDA_signed__c is a checkbox field on an Account.

AI came up with this description, which is correct but not very helpful:

This checkbox field indicates whether a non-disclosure agreement (NDA) has been signed by the account. When checked, it confirms that a valid NDA is in place between the organization and the account. This field is essential for tracking compliance and ensuring that sensitive information is legally protected under the terms of the NDA.

A better description is below, but AI could never know that intent and the implied dependencies between fields. I created this because I understood the reason for the field.

The field determines if there is a non-disclosure agreement (NDA) in place that defines what can be disclosed by marketing, sales, and customer success to prospects, customers, and partners. The link to the signed NDA document is stored in the field NDA_doc__c. The NDA field is controlled by legal. The end date of the NDA or next review date is stored in NDA_date__c. This is part of the Compliance App.

Example 2: High Value

Let’s look at another example, from the TDX24 demo. This is my interpretation as I don’t have detailed knowledge of the org that was configured for the demo. The demo shows Einstein Copilot summarizing the contact engagement and sorting through to find the high-value ones checking in today. It is here in the TDX24 recording on Salesforce+.

To be able to answer “how many high value customers are checking in today” it needs to know what the criteria are for a high-value customer; so, presumably a field on the Contact. It needs to know who is checking in today, which is in the reservations systems; this is an external system, but the data is visible through Data Cloud.

So Einstein needs a description of the high value field on Contact (High_Value__c) and the description on the field that is pulling data from the reservations system (Check_In__dlm – a Data Model field). Now these fields seem fairly self-explanatory.

We asked AI for a description for High_Value__c. I am not sure from the TDX24 demo whether this field is a checkbox, text field, or dollar amount. AI assumed it was a checkbox, and this is the description it generated.

The ‘High Value’ checkbox field on the Contact record is used to identify contacts who are considered high value to the organization. When checked, this field signifies that the contact plays a significant role in business operations, either through substantial revenue generation, strategic influence, or other critical contributions. This classification helps in prioritizing customer relationship management efforts and allocating resources effectively.

This is perfect for Copilot to be able to pull the list. But the descriptions are also for the Salesforce team to understand the impact of changes in the business. How do they know what the rules are to check this field? And how are they dealing with high-value customers differently? What processes are triggered manually or automatically if this box is checked? Again, this can only be known by the business when they designed the process.

Example 3: Check-In Date

We also asked AI to write the description for the reservations system Check-in Date field (Check_In__dlm). This was the Data Model field that was linked to the external system using Data Cloud. This was via a Data Source field that was linked to the DLO field, that was linked to the DMO field. BTW I am not sure where you would store this so Einstein Copilot can read it, as DMO fields do not have descriptions. Nevertheless, let’s continue. Here is the description AI generated:

This field records the date when a guest is scheduled to check in as part of a reservation. It is essential for managing bookings, planning occupancy, and coordinating services in the reservations app. The Check-in Date helps staff prepare for guest arrivals, ensuring that accommodations are ready and any special requests are addressed in a timely manner. This field should be accurately populated at the time of reservation confirmation. It is crucial for operational scheduling, such as cleaning and maintenance, and should be used in coordination with the Check-out Date to manage room availability and turnover. Ensure that the field is included in reports that assist in daily operations and occupancy forecasting.

That is great, except this field is only completed when the guest actually checks in at the resort. There is another field called CheckIn__dlm, which is the scheduled check-in date. There are two different fields for a reason. CheckIn__dlm is used so that guests can be charged from the date they booked to stay. Check_In__dlm is used to know that they are at the resort, for scheduling, compliance, and health and safety.

How could AI even know this? This is business logic inside the reservations system, which is outside the Einstein 1 Platform and is made even more confusing because I used poor naming of the DMO fields (to simulate reality). One consideration with Data Cloud is that once configurations are set up, they are very, very difficult to edit. All the advice from Salesforce is to spend 80% of the time planning and 20% building. So part of that planning is naming conventions and your documentation strategy. So AI doesn’t look like it can really help us create field descriptions.

AI cannot document your entire org

As you can see from a couple of simple, but representative examples, AI can support the creation of some documentation. But the ISV claims of “Our AI can document your org” should be treated with a huge level of skepticism. You also need to question the value of the documentation that it is able to create. And it could simply create a ton of busy work checking AI-generated descriptions for metadata that is not used.

“Metadata descriptions. Can AI write them for you?” No. That answers the question. But if you want to understand how to document your org, read on.

Streamlined approach

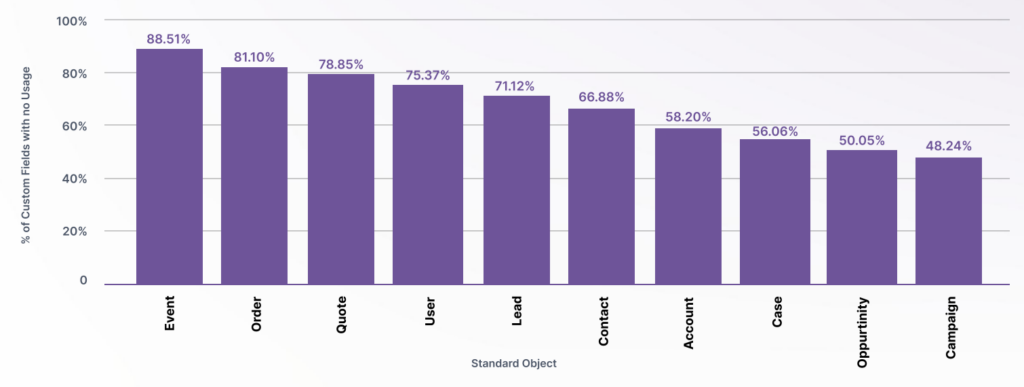

There is still a huge need to document your org. And as AI cannot do it for you we need a streamlined, efficient approach. This could be a monumental task as you probably have 10,000s of metadata items. And a lot of it is wasted effort. Our recent research report has shown that on average 40% of all custom fields are never used. And if you look at the 10 core objects it climbs to 88%. It was a huge waste of time creating these fields. Let’s not waste more time documenting them!
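
One way to avoid documenting unused fields is to rank them by how often they are populated and skip those that never are. The sketch below is a minimal illustration in Python. The `FieldUsage` record, the function names, and the sample counts are all assumptions made up for the example. In practice the counts would come from your own usage analysis.

```python
from dataclasses import dataclass


@dataclass
class FieldUsage:
    api_name: str
    populated_records: int  # records where the field has a value
    total_records: int      # records on the object


def fill_rate(field: FieldUsage) -> float:
    """Fraction of records where the field is populated (0.0 if no records)."""
    if field.total_records == 0:
        return 0.0
    return field.populated_records / field.total_records


def fields_worth_documenting(
    fields: list[FieldUsage], min_rate: float = 0.0
) -> list[str]:
    """Return API names of fields with a fill rate above min_rate, most used first."""
    used = [f for f in fields if fill_rate(f) > min_rate]
    used.sort(key=fill_rate, reverse=True)
    return [f.api_name for f in used]


# Illustrative data only; the counts are invented for this example.
sample = [
    FieldUsage("NDA_signed__c", 800, 1000),
    FieldUsage("Legacy_Code__c", 0, 1000),
    FieldUsage("High_Value__c", 950, 1000),
]
print(fields_worth_documenting(sample))
```

Raising `min_rate` narrows the list further, which may help when you only have time to document the most heavily used fields.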

Business case to change implementation approach

Most orgs have no documentation because there is insufficient time during the implementation. And, there is often no time allowed after go-live to document the changes. Now you have a great catalyst – the promise (or threat) of AI – that you can use to kick-start the documentation efforts. This is also a chance to change your implementation process.

Much of the documentation that you need is created during the planning or business analysis phase of the project. For many organizations, this is not done rigorously enough in the rush to start building. You can use Data Cloud and AI as the reason to reengineer your implementation lifecycle to include enough business analysis. Whilst this will not fix your current problem – no documentation – it will make sure that every future project is building up a body of org knowledge that will increase over time.

You will see immediate benefits from getting your documentation up to date even before you implement AI. Also, it may seem that the project will take longer, but you will get a better result and accelerate overall time to value. The benefits are overwhelming:

- Reduced rework – build the right thing, first time

- Increase business trust – build what they need, not what they want

- Reduced technical debt – build what is required

- Faster impact analysis – based on better documentation

- Better Data Cloud + AI results – faster, more accurate implementation

As you’ve seen, simply relying on the description field is not enough. You need a metadata dictionary so you have somewhere to connect all the documentation for your metadata. This will support change impact analysis and activities like tech debt reduction. It will accelerate your projects.

BTW If you do not implement more systematic planning, then you will NEVER be able to deliver a Data Cloud implementation. Our experience, and the guidance given by Salesforce, is that Data Cloud is 80% planning and 20% execution. You cannot iterate to the right config for Data Cloud. If you get it wrong, you have to start again. Planning, and documenting what you are planning and what you built, is critical.

Here is Part 1 of a 4-part ebook series on Data Cloud Implementation Best Practice. Part 1 talks about the planning phase and the documentation required to stay on top of the implementation.

Writing so AI understands (MDD)

Now that AI is reading the descriptions, you need to assume every word is taken literally. This means that descriptions need to be written in very simple, clear English. There are some guidelines from “Plain English”, which was established in 1979 to help simplify the way we write. As they say, “Plain language is clear, concise, organized, and appropriate for the intended audience.”

5 tips for better descriptions

There are 10 principles, and here are the 5 that are directly relevant:

- Write in an active voice. Use the passive voice only in rare cases.

- Use short sentences as much as possible.

- Use simple everyday words. Don’t use complex words to try and sound clever. Here is an A-Z of alternative words. For example, an alternative to ‘as a consequence of,’ is ‘because.’

- Omit unneeded words.

- Keep the subject and verb close together.

Nuances and euphemisms

Avoid nuances or euphemisms. Often we hide bad news with the passive voice and alternative words. For example: “You will find that the case object status field is no longer activated due to automation requirements.” A better way of expressing this might be: “We do not track case status using this field. We have a custom field so that we can use it in Flow to drive the automation based on the picklist value.”

Standard TLAs

Every industry is full of TLAs (three-letter acronyms), abbreviations, and jargon. We are not suggesting that you can’t use these terms. But they must be understood by AI, which has been trained on publicly available content. So don’t use acronyms that you have made up or that are very specific to your company. For example, Commission is a standard term. Comms is used in telecommunications or PR, and is not the accepted abbreviation of Commission. RevOps is understood, whilst RVOps is not.
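
A rough way to catch home-grown acronyms is to scan each description for acronym-like tokens and compare them with a list of terms you believe are widely understood. The sketch below is a simple heuristic in Python, not a definitive test. The `KNOWN_TERMS` set and the function name are assumptions, and you would need to maintain the list for your own industry.

```python
import re

# Terms assumed to be widely understood; extend this set for your own industry.
KNOWN_TERMS = {"NDA", "CPQ", "RevOps", "ERD", "API"}

# A token made of letters with at least two capitals, e.g. "NDA", "RevOps", "RVOps".
# API names such as NDA_doc__c are skipped because "_" is not a word boundary.
ACRONYM_PATTERN = re.compile(r"\b[A-Za-z]*[A-Z][A-Za-z]*[A-Z][A-Za-z]*\b")


def unknown_acronyms(description: str) -> list[str]:
    """Return acronym-like tokens not in KNOWN_TERMS, in order of appearance."""
    found: list[str] = []
    for token in ACRONYM_PATTERN.findall(description):
        if token not in KNOWN_TERMS and token not in found:
            found.append(token)
    return found
```

For example, `unknown_acronyms("Used by RVOps and RevOps. See NDA_doc__c for the NDA.")` flags only `RVOps`. A human still needs to decide whether each flagged term should be spelled out or added to the known list.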

Assumed knowledge

You need to make sure that there is no assumed knowledge when writing the descriptions. An example of assumed knowledge is that you have a custom CPQ app but you reused the standard quote object. This is clearly a very obvious example. But there are smaller examples that cause just as much damage. Have you chosen not to use a standard field, but have created an almost identical custom field? The critical information is why you made this decision. It is also important to document, against the standard field, that it is not used.

App: capability or ownership

It is valuable to know that a metadata item supports a capability – finance, commissions, recruiting – or is part of an app – CPQ, RevOps. This may be a logical grouping or part of the way you have architected the changes – 2GP.

Metadata documentation is more than descriptions

There is a ton of other valuable documentation about a metadata item that needs to be stored so that we can understand the impact of making changes and AI can use it in the future. Clearly, this cannot be in the description field – if there is one – which is another reason why every org needs a metadata dictionary. Here is some of the documentation that is related to metadata:

- Ownership: named users

- Dependencies: other metadata inside and outside the Einstein 1 Platform

- Usage: The last time it was used, populated, or run, augmented by Event Monitoring

- Business context: business processes

- Solution design: architecture

- Business change history: requirements, and user stories

- Change impact: calculated risk of making changes

- Change log: tracking changes

- Compliance: Data Classification

- Access: Permissions and Profiles

- Volumetrics: data volumes

- Versioning: this includes API version

- Testing: test cases, expected results, and coverage

- Training content: help text and other training materials and videos

Autogenerated documentation

All the metadata listings can be pulled from the Einstein 1 Platform using the Metadata API, to build and maintain a metadata dictionary. BTW This includes the Data Cloud metadata. Some of the documentation listed comes from the Metadata API. Some can be accessed from an integration to Event Monitoring. Other documentation can be calculated based on the metadata and its related attributes. This gives a structure to be able to link to other documentation.
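
As a small illustration of what can be built from retrieved metadata, the sketch below reads custom field definitions in the Metadata API XML format and lists the fields that have no description. It is a minimal sketch in Python under stated assumptions: the metadata has already been retrieved, and the sample XML, the function names, and the field values are invented for the example.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Salesforce Metadata API files.
NS = {"md": "http://soap.sforce.com/2006/04/metadata"}


def read_field(xml_text: str) -> dict[str, str]:
    """Extract name, label, and description from one CustomField XML document."""
    root = ET.fromstring(xml_text)
    return {
        "fullName": root.findtext("md:fullName", default="", namespaces=NS),
        "label": root.findtext("md:label", default="", namespaces=NS),
        "description": root.findtext(
            "md:description", default="", namespaces=NS
        ).strip(),
    }


def missing_descriptions(xml_documents: list[str]) -> list[str]:
    """Return the names of fields whose description is absent or blank."""
    entries = [read_field(doc) for doc in xml_documents]
    return [e["fullName"] for e in entries if not e["description"]]


# Invented sample documents, for illustration only.
SAMPLE_WITH = """<CustomField xmlns="http://soap.sforce.com/2006/04/metadata">
    <fullName>NDA_signed__c</fullName>
    <description>Shows whether a signed NDA is in place. Controlled by legal.</description>
    <label>NDA signed</label>
    <type>Checkbox</type>
</CustomField>"""

SAMPLE_WITHOUT = """<CustomField xmlns="http://soap.sforce.com/2006/04/metadata">
    <fullName>High_Value__c</fullName>
    <label>High Value</label>
    <type>Checkbox</type>
</CustomField>"""

print(missing_descriptions([SAMPLE_WITH, SAMPLE_WITHOUT]))
```

The same dictionary entries could be extended with ownership, usage, and links to other documentation, which is where a metadata dictionary adds value beyond the description field.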

AI-generated documentation, in addition to descriptions

AI can draw a UPN process diagram based on a prompt that could be direct instructions, or the transcript of an interview. This is using the prompt and the AI’s knowledge of business operations. So the more specific the instructions, the better the diagram.

Writing good user stories that include acceptance criteria is boring and time-consuming. It is something that AI does really well and it is faster and more accurate than humans. You still need to validate the results but it is a huge productivity gain. User stories can be generated from an activity box on a UPN diagram or from a text prompt. If you drive it off the UPN diagram it is just 2 clicks, because AI is picking up all the information from the diagram. If you are prompting AI from text, then you need to give it a lot of context. Therefore, this approach is more time-consuming.

Again, the results will need to be validated by a human in the loop, but the first draft in most cases is pretty good.

Human-generated documentation

Manually created documentation can be attached to metadata. This may have been generated during the business analysis and design phase of the project. It might be during development and deployment. Or it could be the development of end-user training content (guides, decks, video) and change management materials.

How to start documenting

This is where you roll your eyes. You have an org with 10,000 fields, 1,000 Apex Classes, and 500 automations. Sounds scary, tedious, impossible? And there is too much, and much of it is not used. The research shows that 50% of what is created is never used. 51% of custom objects are never populated. 41% of custom fields never have any data entered – ever. And of the custom fields that are used, within 3 months, 50% of them are no longer being filled out.

The good news is you don’t need to document your entire org, and you can take it a bite at a time. We have a simple approach that is practical, achievable, and helps you make measurable progress. It focuses on what is important to your org and the driver which is business transformation or implementing Data Cloud or AI.

Rather than finding a needle in the haystack by getting it all out of the barn and checking every strand, let’s pull on the thread attached to the needle.

3 steps: HOW > WHAT > WHY

- HOW: How does this part of the Org/business work?

- WHAT: What did you change in Salesforce to support it?

- WHY: Why were changes made and where is the documentation that describes why and how you changed Salesforce?

Pick an area

First pick a capability, app or project area. Pick the area that has the greatest level of change. Documenting it will make the biggest difference to impact analysis, even before you apply AI. Alternatively, you may want to pick an area to practice and prove the approach; an area that is low risk and low profile.

For each area do the HOW, WHAT, WHY.

HOW – does it work?

HOW is all about understanding how the business operates and is best described as a simple UPN process diagram. It is how the users use Salesforce to get their job done. It is how that automation or integration works behind the scenes. Mapping processes can feel daunting, especially when you start talking to IT teams who often use a complex modeling notation. For business process mapping, we recommend a simple notation that has been proven in 1,000s of projects over the last 20 years.

WHAT – metadata do you use in Salesforce?

Now you work your way through each process diagram step-by-step and ask the question for each activity step: “What in Salesforce do we use to deliver this step?” This could be an object, field, page layout, email template, flow, etc. You can link them to the process step.

Don’t be surprised when you find the same items are linked to a number of different process steps and are used by different departments. This is where documentation becomes a critical resource for impact analysis for any new changes. There is no “right and wrong” here, but decide on an approach and be consistent to help people leverage this. Some people link the highest levels of their process map to the objects involved. Then on the detailed lower level diagrams, process steps may have links to validation rules, specific triggers, or a detailed automated activity, that was created in automation.
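
The links between process steps and metadata can be turned around to show which items are shared across steps, which is the starting point for impact analysis. The sketch below is a minimal illustration in Python. The process steps, the metadata names, and the function names are hypothetical examples, not taken from a real org.

```python
from collections import defaultdict

# Hypothetical process steps mapped to the metadata items that deliver them.
STEP_TO_METADATA = {
    "Qualify lead": ["Lead.Status", "Lead_Scoring_Flow"],
    "Sign NDA": ["Account.NDA_signed__c", "Account.NDA_doc__c"],
    "Share product roadmap": ["Account.NDA_signed__c"],
}


def metadata_to_steps(step_map: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the mapping so each metadata item lists the steps that use it."""
    usage: dict[str, list[str]] = defaultdict(list)
    for step, items in step_map.items():
        for item in items:
            usage[item].append(step)
    return dict(usage)


def shared_items(step_map: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return metadata items linked to more than one process step."""
    return {
        item: steps
        for item, steps in metadata_to_steps(step_map).items()
        if len(steps) > 1
    }


print(shared_items(STEP_TO_METADATA))
```

In this invented example, a change to `Account.NDA_signed__c` would affect two process steps, so both would need to be reviewed before the change is made.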

WHY – did you do it that way?

And then for each metadata item that you attach, update the description field via the metadata dictionary, and link any existing documentation you have, to the metadata in the metadata dictionary.

Final word

Metadata documentation is now clearly critical and there is enough evidence to build a business case to convince senior management to allocate time to projects. Sadly, AI cannot solve the problem because it does not have enough context – the WHY. But, a simple process-led approach to document metadata means that you can make measurable progress. And alongside that, you can reengineer your implementation approach to build in more business analysis that will accelerate time to value, and ensure you are ready to implement Data Cloud + AI.


Ian Gotts

Founder & CEO

20 minute read

Published: 17th May 2024