Why the ‘prompt’ is the key to unlocking AI success

Imagine you had a fleeting chance to interact with someone you deeply admire, whether that’s a renowned business leader like Marc Benioff, a music icon like Taylor Swift, a celebrated actor like Ryan Reynolds, or any other influential personality. The secret to truly benefiting from such a rare moment lies in posing the right questions.

Consider my experience: I began bass guitar lessons with an extraordinary teacher, a member of an all-women Led Zeppelin tribute band and a widely respected session musician. I told her, “My goal isn’t just to improve my bass playing skills.” She seemed surprised at first, but she understood when I clarified, “I’m here to learn how you think, to understand your approach to playing the bass.” This shift in focus completely changed her teaching method, and my learning and development have accelerated significantly as a result.

When using Generative Pre-trained Transformers (GPTs), we should follow the same principle. It’s all about the prompts you use: they determine whether the GPT’s responses are not only accurate but also relevant and tailored to the user’s needs. This article explores why well-designed prompts matter, how the way you frame your questions shapes the answers, and how this improves AI’s performance across various applications.

The essence of crafting effective prompts

An effectively crafted prompt serves as a guide, helping the GPT understand the command and respond in a way that matches the user’s intent. With Large Language Models (LLMs) like ChatGPT, the quality of your questions is crucial; a prompt is far more than a Google search. The additional context you provide in the prompt, your domain knowledge and your understanding of how the specific model operates all contribute to generating meaningful, contextually relevant responses.

The success of a GPT prompt hinges on two fundamental aspects: context and specificity. Context provides the necessary background information, setting the stage for the GPT to understand the scenario or subject matter. Specificity directs the GPT’s focus to the approach, the input and the format of the results required. Together, these components form the backbone of effective prompt engineering.
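The split between context and specificity can be made explicit in a reusable structure. Below is a minimal sketch, assuming a hypothetical `PromptSpec` with four labelled sections; the section names and sample text are illustrative, not a format that any GPT requires.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical split of a prompt into context plus the specific instructions."""

    context: str        # background: who is asking, and about what
    approach: str       # specificity: how the GPT should tackle the task
    input_data: str     # specificity: the material to work from
    output_format: str  # specificity: the shape of the result

    def render(self) -> str:
        # Label each part so the model does not have to guess what each piece is for.
        sections = [
            ("CONTEXT", self.context),
            ("APPROACH", self.approach),
            ("INPUT", self.input_data),
            ("OUTPUT FORMAT", self.output_format),
        ]
        return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)


spec = PromptSpec(
    context="You are a business analyst reviewing a quote-approval process.",
    approach="Identify each manual handoff and suggest where automation could help.",
    input_data="Step 1: Sales rep creates a quote. Step 2: Manager approves it by email.",
    output_format="A numbered list with one recommendation per handoff, under 150 words.",
)
print(spec.render())
```

Keeping the four parts as separate fields makes it easy to see which one is missing when a response comes back generic.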

The effectiveness of your prompts hinges on their specificity and length: detailed, extensive prompts typically yield better and more thorough responses.

Treating a prompt like unpredictable code

If you’ve written code, then you know exactly what the answer is going to be; the compiler doesn’t change. When I write a prompt, I’m sending it to an LLM that comes back with a result. That large language model may be evolving through training, refinement and optimization, so the result will change over time, something called drift. That puts even more emphasis on how prompts are managed.

If you’ve embedded a prompt in an app, or if it’s available as a shared template, it needs to be managed through the software development life cycle (SDLC). The key steps are:

Initially, you plan and work out what outcomes you’re expecting. This goes beyond the result yielded by the prompt; it encompasses what that result is trying to achieve. For example, if the prompt formulates an email based on a customer’s data, triggered by some action, then what action do you want the email to drive?

Next, you build the prompt. This is quite iterative: you write, you test. Simply reversing the position of two words in the prompt can give dramatically different results. What we’ve discovered is that the longer and more detailed the prompt, the better the answer. It is both better and quicker to write a tighter (and probably longer) prompt. If you put words IN CAPITALS, the LLM takes them more seriously.

Writing prompts that get great results is a whole skill set in and of itself. The Elements.cloud team spent three days optimizing a prompt inside ElementsGPT that takes business process steps and automatically builds user stories with acceptance criteria. That time was worth spending because the results are off the charts.

Then comes testing. How do you sign off a test if each run of the prompt returns a slightly different answer? Is automated testing even useful? We need to think more broadly about what testing means.
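One way to think more broadly about testing is to stop comparing against a single exact expected answer and instead check properties that every acceptable answer must have, across several runs. The sketch below assumes a hypothetical `fake_llm` stand-in for a real model call; the specific checks and the 95% sign-off threshold are illustrative assumptions.

```python
import random
from typing import Callable


def fake_llm(prompt: str, seed: int) -> str:
    """Stand-in for a real model call (hypothetical): wording varies from run to run."""
    rng = random.Random(seed)
    greeting = rng.choice(["Hi Sam,", "Hello Sam,", "Dear Sam,"])
    return (
        f"{greeting}\n"
        "Thanks for your order. Your 10% renewal offer expires on 31 March.\n"
        "Regards,\nThe Team"
    )


def check_offer_email(text: str) -> list[str]:
    """Check properties every acceptable answer must have; return the failures."""
    failures = []
    lines = text.splitlines()
    if not lines or not lines[0].endswith(","):
        failures.append("no greeting line")
    if "10%" not in text:
        failures.append("missing offer amount")
    if "31 March" not in text:
        failures.append("missing expiry date")
    if len(text.split()) > 120:
        failures.append("too long")
    return failures


def pass_rate(generate: Callable[[str, int], str], prompt: str, runs: int = 20) -> float:
    """Run the same prompt several times; return the share of outputs passing every check."""
    passed = sum(1 for seed in range(runs) if not check_offer_email(generate(prompt, seed)))
    return passed / runs


rate = pass_rate(fake_llm, "Write a renewal offer email for Sam.")
print(f"pass rate: {rate:.0%}")  # sign off only above an agreed threshold, e.g. 95%
```

The greeting changes between runs, but the test still passes because it checks what matters (offer, expiry date, length) rather than exact wording.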

Next, consider how we manage versions of prompts. We need a repository of prompts as shareable assets, rather like email templates: we need to be able to share them, version them and iterate on them to make them better and more valuable. Our Product Management team has built a series of prompt templates that automatically build our internal website, sharing details about forthcoming new product features.
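A prompt repository can treat each template like any other versioned asset. This is a minimal sketch of that idea, assuming a hypothetical in-memory `PromptTemplate` model; a real repository might live in a database or a custom object, and the names here are illustrative only.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    note: str  # why this version changed
    created: date


@dataclass
class PromptTemplate:
    """A shareable prompt asset with its own version history (hypothetical model)."""

    name: str
    owner: str
    versions: list[PromptVersion] = field(default_factory=list)

    def publish(self, text: str, note: str, created: Optional[date] = None) -> PromptVersion:
        # Every change becomes a new numbered version; earlier versions are never overwritten.
        version = PromptVersion(len(self.versions) + 1, text, note, created or date.today())
        self.versions.append(version)
        return version

    @property
    def current(self) -> PromptVersion:
        if not self.versions:
            raise LookupError(f"{self.name} has no published versions")
        return self.versions[-1]

    def get(self, number: int) -> PromptVersion:
        if not 1 <= number <= len(self.versions):
            raise LookupError(f"{self.name} has no version {number}")
        return self.versions[number - 1]


feature_page = PromptTemplate("feature-page", owner="Product Management")
feature_page.publish("Describe {feature} for customers.", "first draft")
feature_page.publish("Describe {feature} for Salesforce admins in under 200 words.", "added audience and length")
print(feature_page.current.number, feature_page.current.note)
```

Keeping old versions (rather than editing in place) means a result can always be traced back to the exact prompt text that produced it.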

There are some new concepts that you wouldn’t find in a traditional SDLC. Are the prompts being used? Are the results what we expected? Are users taking the results and having to edit them? We need a mechanism for monitoring usage, tracking how much users are modifying the results, and a way to collect end-user feedback.
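Tracking how much users modify results can be as simple as comparing the generated text with what they finally saved. A minimal sketch, assuming the app logs both versions; it uses the standard library `difflib.SequenceMatcher` similarity ratio, and the 0.5 "heavy edit" threshold is an illustrative assumption.

```python
from difflib import SequenceMatcher


def edit_ratio(generated: str, saved: str) -> float:
    """How much of the generated result the user changed: 0.0 = kept as-is, 1.0 = fully rewritten."""
    return 1.0 - SequenceMatcher(None, generated, saved).ratio()


def usage_report(events: list[tuple[str, str]], heavy_edit: float = 0.5) -> dict:
    """
    events: (generated_result, text_the_user_actually_saved) pairs logged for one prompt.
    heavy_edit: illustrative threshold above which an edit counts as a heavy rewrite.
    """
    if not events:
        return {"uses": 0, "mean_edit_ratio": None, "heavily_edited_share": None}
    ratios = [edit_ratio(generated, saved) for generated, saved in events]
    return {
        "uses": len(ratios),
        "mean_edit_ratio": round(sum(ratios) / len(ratios), 3),
        "heavily_edited_share": sum(r > heavy_edit for r in ratios) / len(ratios),
    }


log = [
    ("Thanks for your order.", "Thanks for your order."),      # accepted unchanged
    ("Thanks for your order.", "Please call me back today."),  # largely rewritten
]
print(usage_report(log))
```

A rising share of heavily edited results is a signal that the prompt needs attention, even before any user leaves explicit feedback.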

We also need to track drift. How much is the prompt’s result changing over time as the LLM evolves? If I look at it in a week’s time, how different is the result from the one we built and tested today? And what is the direction of drift: is it getting better or worse? As prompt engineers, we need to monitor prompt results; monitoring something for drift is a new concept. Relying on user feedback alone isn’t enough.
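Drift can be measured by re-running the prompt on a fixed set of test inputs and comparing the results with those recorded at sign-off. The sketch below assumes you stored those baseline outputs, and that a hypothetical `score` function rates each output from 0 to 1; text similarity gives the amount of drift and the score change gives its direction.

```python
from difflib import SequenceMatcher
from typing import Callable


def drift_report(
    baseline: dict[str, str],
    current: dict[str, str],
    score: Callable[[str], float],
) -> dict:
    """
    Compare today's outputs with those recorded when the prompt was signed off.

    baseline / current: output text keyed by test-case id, produced by the same
    prompt on the same fixed inputs. score: rates one output from 0 to 1, for
    example the share of property checks it passes (an assumption).
    """
    cases = sorted(baseline.keys() & current.keys())
    if not cases:
        raise ValueError("no shared test cases to compare")
    similarity = [SequenceMatcher(None, baseline[c], current[c]).ratio() for c in cases]
    change = [score(current[c]) - score(baseline[c]) for c in cases]
    mean_change = sum(change) / len(cases)
    if mean_change > 0:
        direction = "better"
    elif mean_change < 0:
        direction = "worse"
    else:
        direction = "flat"
    return {
        "cases": len(cases),
        "drift": round(1 - sum(similarity) / len(cases), 3),  # 0 = identical to baseline
        "direction": direction,
    }


def mentions_expiry(text: str) -> float:
    """Toy scoring rule: an offer email should always state when the offer expires."""
    return 1.0 if "expires" in text else 0.0


baseline = {"renewal": "Your offer expires on 31 March."}
current = {"renewal": "Your offer is available now."}
print(drift_report(baseline, current, mentions_expiry))
```

Here the wording has drifted and the expiry date has disappeared, so the report flags the drift as getting worse.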

When a prompt is high risk, we may need to monitor it weekly or daily. An example would be a prompt whose result has a material impact, such as an email with an offer sent to a customer when an action or value hits a threshold. By contrast, an internal email would likely pose less of an issue, so we would monitor that prompt monthly instead.
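Risk-based monitoring can be captured as a simple schedule. A minimal sketch, assuming each prompt is tagged with a hypothetical `Risk` level and the date it was last checked; the intervals (daily, weekly, 30 days) are illustrative choices in line with the text.

```python
from datetime import date, timedelta
from enum import Enum


class Risk(Enum):
    HIGH = "high"      # e.g. customer-facing offer emails
    MEDIUM = "medium"
    LOW = "low"        # e.g. internal emails


# Illustrative intervals only: daily for high risk, weekly for medium, monthly for low.
CHECK_INTERVAL = {
    Risk.HIGH: timedelta(days=1),
    Risk.MEDIUM: timedelta(weeks=1),
    Risk.LOW: timedelta(days=30),
}


def due_for_check(prompts: dict[str, tuple[Risk, date]], today: date) -> list[str]:
    """Return the prompts whose next drift check falls on or before today."""
    return sorted(
        name
        for name, (risk, last_checked) in prompts.items()
        if last_checked + CHECK_INTERVAL[risk] <= today
    )


portfolio = {
    "customer-offer-email": (Risk.HIGH, date(2024, 1, 11)),
    "internal-update-email": (Risk.LOW, date(2024, 1, 1)),
}
print(due_for_check(portfolio, today=date(2024, 1, 12)))  # ['customer-offer-email']
```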

Finally, a prompt can have metadata dependencies if it pulls data from systems to generate the result. If development teams don’t know that a field is used by a prompt and they change it, the prompt might not break, but the result will be affected, and that impact may go unnoticed.
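One way to make these dependencies visible is to index which fields each prompt template reads, so a field change can be checked against the prompts it affects. A minimal sketch, assuming templates reference fields as `{Object.Field}` placeholders (an illustrative convention); it uses the standard library `string.Formatter().parse` to find them.

```python
import string
from collections import defaultdict


def fields_used(template: str) -> set[str]:
    """Collect the {placeholder} field references in a prompt template."""
    return {name for _, name, _, _ in string.Formatter().parse(template) if name}


def dependency_index(templates: dict[str, str]) -> dict[str, set[str]]:
    """Map each data field to the names of the prompt templates that read it."""
    index: dict[str, set[str]] = defaultdict(set)
    for prompt_name, text in templates.items():
        for field_name in fields_used(text):
            index[field_name].add(prompt_name)
    return dict(index)


def impacted_prompts(index: dict[str, set[str]], changed_field: str) -> list[str]:
    """Which prompts should be retested if this field is changed?"""
    return sorted(index.get(changed_field, set()))


templates = {
    "offer-email": "Write an offer for {Contact.FirstName} worth {Opportunity.Amount}.",
    "case-summary": "Summarize the case titled {Case.Subject}.",
}
index = dependency_index(templates)
print(impacted_prompts(index, "Opportunity.Amount"))  # ['offer-email']
```

Running this check as part of a field change review gives development teams the visibility the text describes, before the change reaches production.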

In a practical scenario, a prompt uses an amount field to format an offer email. The amount field was in dollars, but now you’re moving to multi-currency. The amount field still contains the value, and a second field next to it in the page layout displays the currency symbol. The prompt only looks at the amount field. Suddenly we’re putting Sterling in there, which is worth around 1.2 dollars, but the AI doesn’t care. It simply picks up the number from the amount field and makes decisions for you.
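A guard against this failure is to make the prompt read the currency alongside the amount, and to refuse to build the prompt when the currency is missing. A minimal sketch; the record shape and the field names `Amount` and `CurrencyIsoCode` are assumptions for illustration.

```python
from decimal import Decimal


def offer_amount_line(record: dict) -> str:
    """
    Build the amount line for an offer-email prompt from a CRM-style record.
    The field names 'Amount' and 'CurrencyIsoCode' are assumptions for illustration.
    """
    amount = record.get("Amount")
    currency = record.get("CurrencyIsoCode")
    if amount is None:
        raise ValueError("record has no Amount")
    if not currency:
        # Fail loudly instead of letting the model assume the amount is in dollars.
        raise ValueError("Amount has no currency; refusing to build the prompt")
    return f"Offer amount: {Decimal(str(amount)):,.2f} {currency}"


print(offer_amount_line({"Amount": 1500, "CurrencyIsoCode": "GBP"}))  # Offer amount: 1,500.00 GBP
```

Failing loudly is a deliberate choice: a missing currency stops the email rather than silently sending a Sterling figure labelled as dollars.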

Operationalizing prompt templates for efficiency

The use of prompt templates is a strategic approach in the realm of AI interactions. These templates are pre-designed prompts that serve as a starting point for common queries or tasks, streamlining the process of interacting with AI systems. This section explores the methodology behind creating and utilizing these templates, highlighting their role in enhancing efficiency and consistency in AI responses. By operationalizing prompt templates, organizations can ensure quicker and more standardized interactions, leading to better user experiences and more reliable outcomes.

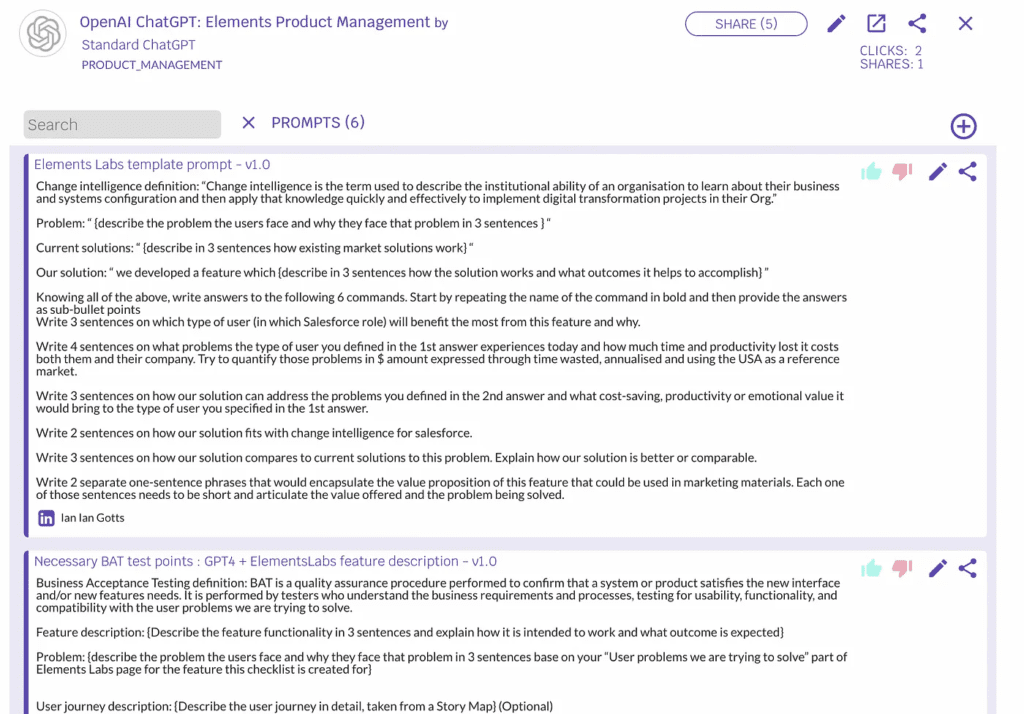

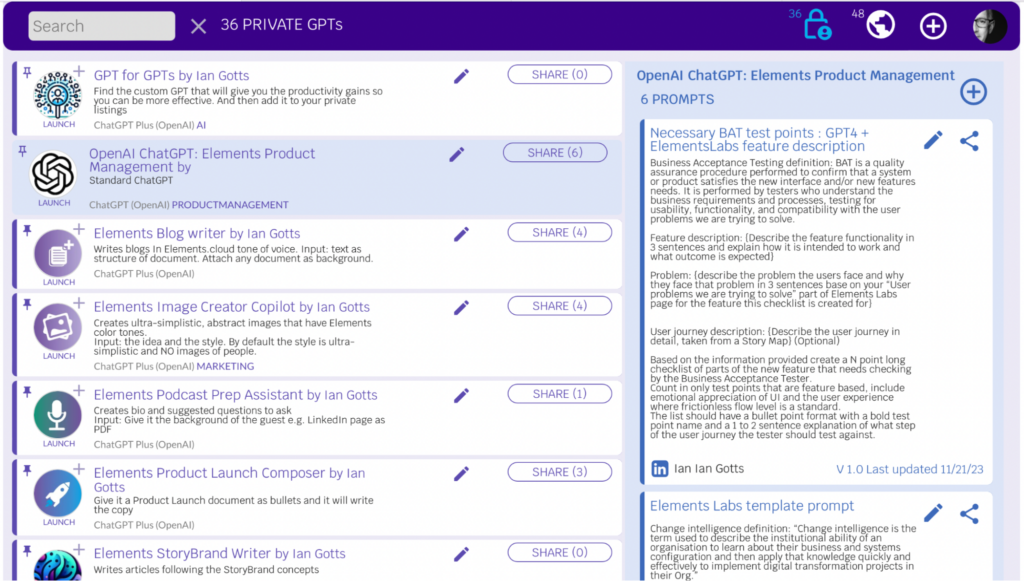

The Elements.cloud Product Management team has developed prompt templates of 300 to 400 words, meticulously refined over the past six months. These in-depth prompts have markedly improved our team’s efficiency, leading to productivity gains of 20 to 50 times, and they have become a fundamental part of our everyday workflow. The prompts also include placeholders in curly brackets ({}), which are replaced with specific information before use, making the templates versatile. There are a couple of examples in the images above.
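Curly-bracket placeholders happen to match Python’s `str.format` syntax, so filling a template can be sketched in a few lines. This is a minimal illustration, not the Elements.cloud implementation: the template text and values are hypothetical, and the helper refuses to return a prompt with any placeholder left unfilled.

```python
class _TrackMissing(dict):
    """Dict that remembers any placeholder the caller forgot to supply."""

    def __init__(self, values: dict[str, str]) -> None:
        super().__init__(values)
        self.missing: list[str] = []

    def __missing__(self, key: str) -> str:
        self.missing.append(key)
        return "{" + key + "}"  # leave the placeholder visible


def fill_template(template: str, **values: str) -> str:
    """Replace {placeholders}; refuse to return a half-filled prompt."""
    mapping = _TrackMissing(values)
    filled = template.format_map(mapping)
    if mapping.missing:
        raise ValueError("unfilled placeholders: " + ", ".join(mapping.missing))
    return filled


FEATURE_PAGE = (
    "You are a product marketer writing for {audience}. "
    "Summarize the new feature '{feature}' in under {word_limit} words, "
    "then list three benefits as bullet points."
)
print(fill_template(FEATURE_PAGE, audience="Salesforce admins",
                    feature="bulk field editing", word_limit="150"))
```

One caveat with this approach: any literal braces in the prompt text must be doubled (`{{` and `}}`) so they are not read as placeholders.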

Standard GPT, custom GPT, and apps using API

Understanding the distinction between standard and custom GPT models is crucial for prompt design. Standard GPTs, such as ChatGPT or Claude, require prompts that provide the context and detailed instructions to steer them towards the desired responses. Custom GPTs, offered by OpenAI, can be tailored to specific domains or tasks and deliver better accuracy with less detailed prompts, because the background knowledge and instructions are built into the custom GPT.

GPT can also be embedded into an app using APIs. The app uses its own data to format the prompt and provide the context. The result may be presented in the UI or used inside the app, with access controlled through the app’s security model and UI.
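Here is a sketch of how an app might format a prompt from its own data while respecting its security model: only fields the current user can read are included in the context. The app, record and field permissions are hypothetical; the list of `role`/`content` messages follows the common chat-completion convention, and the actual API call is deliberately left out.

```python
def build_messages(record: dict[str, str], readable_fields: set[str], instruction: str) -> list[dict[str, str]]:
    """
    Assemble a chat-style request from the app's own data (hypothetical app).
    Only fields the current user is allowed to read are included, so the app's
    security model decides what context reaches the model.
    """
    visible = {name: value for name, value in record.items() if name in readable_fields}
    context = "\n".join(f"{name}: {value}" for name, value in sorted(visible.items()))
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": "Record data:\n" + context},
    ]


account = {"Name": "Acme Ltd", "Industry": "Manufacturing", "CreditNotes": "On watch list"}
messages = build_messages(
    account,
    readable_fields={"Name", "Industry"},  # e.g. this user cannot see CreditNotes
    instruction="Draft a two-sentence account summary for a sales rep.",
)
print(messages[1]["content"])
```

Filtering before the prompt is built means the model never sees data the user could not see in the app itself.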

This section delves into the nuances of working with the different types of models, offering insights into how to best leverage each, according to the task in question.

Standard GPT

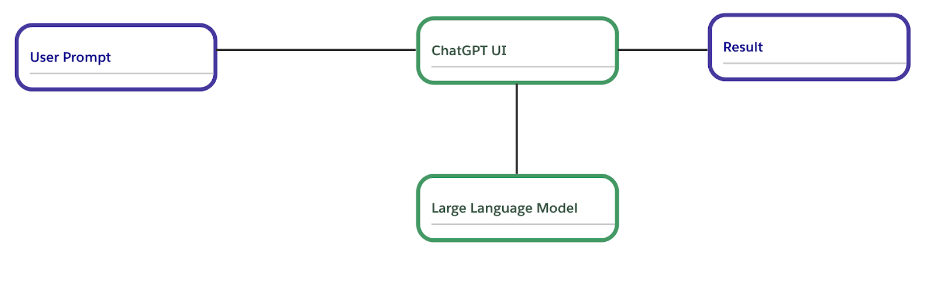

The diagram above shows the standard GPT architecture. The user enters a prompt in the ChatGPT UI and the LLM returns a result. The only data it can draw on is the LLM’s training data, or a single file that you attach to the prompt. Hence it is subject to all the biases of its training data. Note: these diagrams were drawn in the Salesforce Diagrams Architecture Standard using Elements.cloud.

Custom GPT

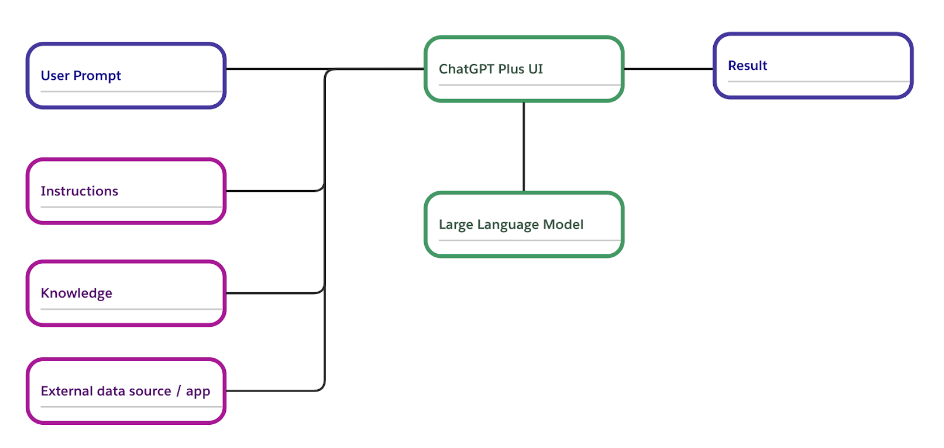

OpenAI has introduced custom GPTs, specialized versions of ChatGPT that can be enhanced with specific instructions, up to 10 knowledge files and API links to external sources. This means that much of the information previously required in prompts can now be stored in those instructions. Consequently, you can combine your unique data with the Large Language Model (LLM), leading to substantially improved outcomes, as illustrated in the diagram above.

The UI is the same as standard ChatGPT. Because custom models use the instructions and knowledge in addition to the prompt, the prompt can be shorter. Access to these models requires a ChatGPT Plus account. ChatGPT Plus users can create their own custom GPTs and choose to keep them private or share them publicly. Private models are accessible only via a unique link, making them suitable for sharing sensitive or specialized information within a restricted group, such as team members.

In contrast, public models are available to a wider audience, offering content creators a platform to share their knowledge and tools more broadly. The effectiveness of these custom GPTs depends on various factors, including the creator’s expertise, the quality of the instructions, the relevance of the attached knowledge, and the effort put into testing and refining them. Generally, the most successful models are those with a clearly defined and narrow focus.

Here are a couple of examples:

- TDX submission guide (public): this tool assists in crafting your proposal for a presentation, guiding you through the submission form.

- Success story writer (private): converts a customer interview transcript into a formatted success story.

The success of these custom GPTs for users largely hinges on the quality of the prompts used. Typically, the creator is best equipped to know which questions will yield the most useful responses. While a custom GPT can offer up to four brief conversation starters, achieving optimal results often requires more comprehensive and detailed prompts.

Be cautious when making your custom GPT model public. Someone can manipulate the GPT into divulging the instructions, listing the knowledge file names, and summarizing the contents of these files. Therefore, carefully consider what information you are willing to share publicly, including the names of your knowledge files. Additionally, be aware that anyone with access to the private link can view the model.

OpenAI has launched a GPT Store, enabling thousands of creators to have their custom GPTs discovered and potentially monetized. Before you give up your day job expecting to make millions from a custom GPT, realize that the barrier to entry for building custom GPTs is low and the IP is difficult to defend. I don’t see a huge amount of money being made by everyone; it’s a volume game.

Because OpenAI does not allow you to put “Salesforce” in the name of a custom GPT, it is impossible to search the GPT Store for custom GPTs that are specific to Salesforce. So we’ve created a free app that lists Salesforce-specific custom GPTs. It also allows you to share them and store your prompt templates.

GPT embedded in an App

Salesforce and various ISVs are integrating GPT technology into their applications. This integration allows the app to help structure the prompt, customize the instructions and supply its own internal data as context. The output might be displayed in the app’s user interface (UI) or used internally by the app. How it is managed and accessed is governed by the app’s own security protocols and UI design.

Here are some examples:

- ElementsGPT can take the transcript of a discovery call, a screenshot of a process diagram from any app, or a hand-drawn process, and then design a process map. It’s not just drawing a diagram; it uses its knowledge of processes to design it.

- Copado provides AI-driven expert advice on how to use its DevOps platform, with answers on conflict resolution, back promotions and release management.

10 ideas to write better prompt templates

- Focus on crafting prompt templates: work towards creating a prompt that can be reused as a template. Aim for a satisfactory result with your initial prompt that doesn’t require an extended back-and-forth dialogue. If a conversation does evolve, use it to refine and perfect your prompt template.

- Engage ChatGPT as a conversational partner: understand that ChatGPT is more than just an advanced search tool; it’s a conversational AI. Approach it as you would a discussion partner. Engage in an interactive dialogue, building on responses, clarifying points, and delving deeper into subjects.

- Establish clear context and provide content: the context is key. If you’re a business analyst, for example, frame your prompt from that viewpoint so ChatGPT can provide responses that are more relevant to your field. Then provide reference content, so that the GPT is not relying solely on the LLM’s training data, ensuring relevant, focused output.

- Be precise: Avoid vague questions to prevent generic answers. Be explicit about your needs, including specific details about your data, goals, and preferred methods or tools. This helps ChatGPT generate more relevant and useful responses.

- Define result format: clearly state how you want ChatGPT to present the results, whether it’s in text, code, images, or charts created by the Code Interpreter function.

- Adhere to standards: incorporating industry standards into your prompts ensures that the analysis and results are formatted correctly and professionally. Whether it’s user stories, UPN process diagrams, or other frameworks, referencing these standards can guide ChatGPT’s responses.

- Record and improve: keep track of the prompts you use and their effectiveness. Store and continually refine successful prompts to develop your prompt-crafting skills.

- Make prompts operational: integrate your effective prompts into your team or organization’s daily processes. Share and refine them based on collective input and feedback.

- Split up complex tasks: the key is to break down a complex task into smaller, more manageable subtasks. The idea is to ask a question, use a critical part of that response to form your next question, and so on. It’s important to keep the context clear and not let ChatGPT guess which parts of your request are most important.

- Embrace experimentation: the AI field is constantly evolving. Be open to experimenting with different phrasing and structures in your prompts, observing how variations can yield diverse responses.
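Splitting up a complex task, as suggested in the list above, amounts to chaining prompts so that each answer feeds the next question. A minimal sketch, assuming a hypothetical `ask` function that sends one prompt to a model; here a stand-in `echo` function lets the chain run without a real LLM.

```python
from typing import Callable


def run_chain(steps: list[str], ask: Callable[[str], str], subject: str) -> list[str]:
    """
    Run subtasks in order. Each step is a template that may use {subject}, the original
    input, and {previous}, the answer to the step before. `ask` sends one prompt to a
    model and returns its text; any client could be plugged in here.
    """
    answers: list[str] = []
    previous = ""
    for step in steps:
        prompt = step.format(subject=subject, previous=previous)
        previous = ask(prompt)
        answers.append(previous)
    return answers


def echo(prompt: str) -> str:
    """Stand-in model for testing the chain without calling a real LLM."""
    return f"[answer to: {prompt}]"


answers = run_chain(
    [
        "List the steps in this process: {subject}",
        "Write one user story with acceptance criteria for each step below:\n{previous}",
    ],
    ask=echo,
    subject="quote approval",
)
print(answers[-1])
```

This sketch passes the whole previous answer forward; in practice you might extract only the critical part of each response before building the next question, to keep the context clear.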

Bonus: USE CAPITALS to emphasize important instructions in the prompt. For example: “Please summarize the attached transcript. ONLY USE information from the transcript.”

Managing prompt templates

Having a systematic approach to organizing prompt templates for ChatGPT and similar models is crucial. One way to manage these prompts within your organization is by creating custom objects in Salesforce. However, it’s important to remember that this isn’t a replacement for Einstein Prompt Studio, which is specifically designed for handling prompt templates in Salesforce.

As the learning curve is ongoing, we encourage not only the creation and sharing of custom GPTs but also of the prompts that power them. There’s also a growing trend to save private prompts as templates. We created a Salesforce-specific custom GPT directory to help the ecosystem find relevant GPTs and, recognizing the growing need for prompt management, we’ve significantly broadened its capabilities to include tracking prompt templates.

The final word

I know that feels like a lot, but actually we need to move beyond thinking a prompt is ‘like Google search but cooler.’ We need to put some guardrails around this: thinking of a prompt as reusable code that needs to be managed around a software development life cycle. Without putting thought into how we manage prompts, it can quickly turn from a cooler version of a Google search, into something way scarier.


Ian Gotts

Founder & CEO

Published: 12th January 2024