Understanding the magic of GPT

Written by Ian Gotts

AI + Data + CRM

Salesforce’s new marketing message is AI + Data + CRM. It all adds up to customer magic.

At the heart of this magic is Generative AI or GPT, not ChatGPT.

BTW GPT is not an abbreviation of ChatGPT. GPT (Generative Pre-Trained Transformer) is a technology that uses an LLM (Large Language Model) to produce natural language discussions. ChatGPT is just one of the interfaces into OpenAI’s GPT/LLM. A detailed explanation is in this article. https://elements.cloud/blog/chatgpt-just-hints-at-the-power-of-generative-ai/

As a customer of an organization running Salesforce, such as one of the 500 million F1 fans, you can simply sit back and be amazed by the magic. But as a Salesforce professional involved in building, maintaining, and managing Salesforce, it is important you have a working knowledge of what is going on behind the scenes. So if we are going to be able to use GPT effectively we need to understand how it works and its limitations.

Before we launch in, here is a fantastic video of the famous magician Penn, baffling Rebel Wilson with a rope trick, but making it very clear to the audience how he is doing it.

We need to be the audience, not Rebel Wilson.

How GPT / LLMs work

TL;DR.

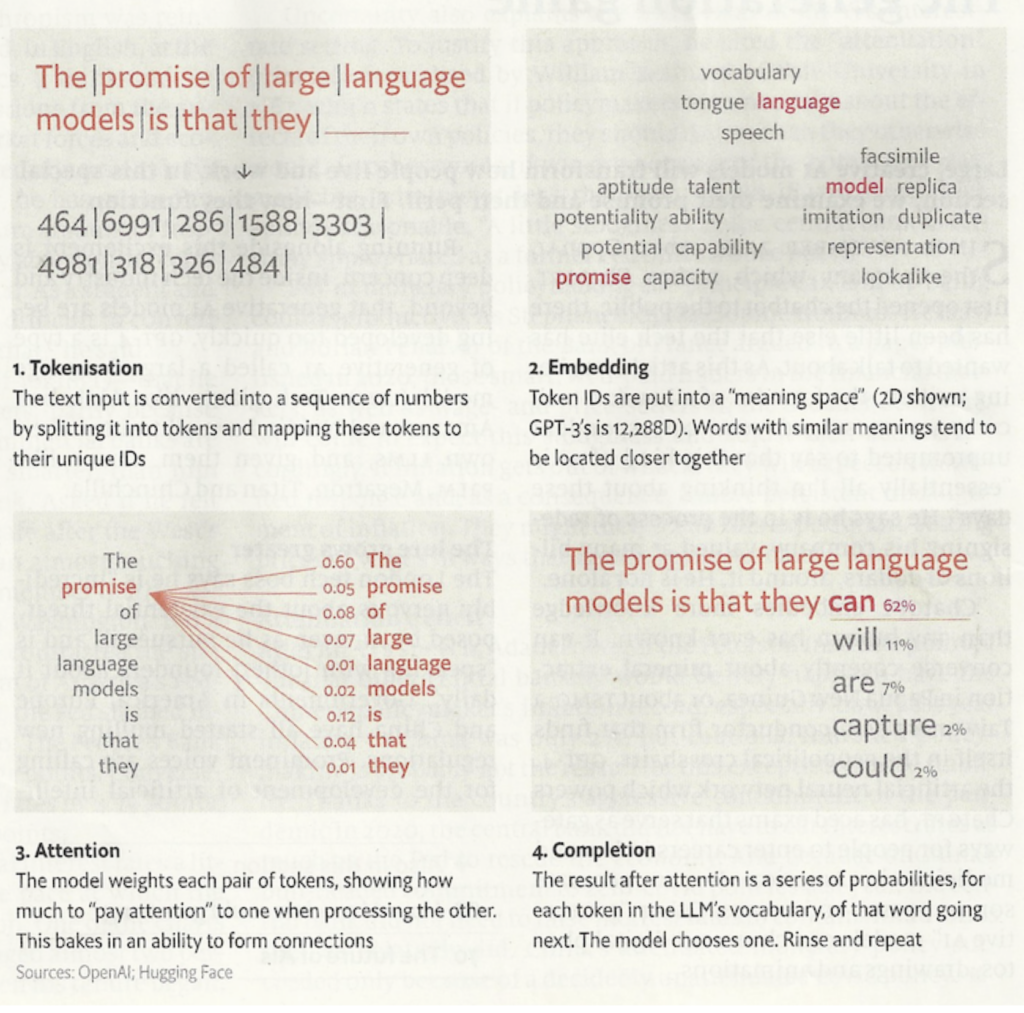

GPT is able to predict the next word in a sentence based on probabilities (and how creative you’ve asked it to be). It requires a massive amount of trained data, algorithms capable of learning, and raw computing power.

Cliff Notes

It is a simple step-by-step process to predict each word. Here is a great graphic that shows how it goes about it. If you want to get into the nitty gritty detail, there is an Economist article called “The generation game” from 22 April 2023, which is where this graphic came from

Deep Dive

An even longer read is the OpenAI White Paper from 2020 called “Language Models Few Shot-Learners”

Chat GPT is a limited interface into GPT

ChatGPT is amazing

ChatGPT is just one of many applications that are conversational interfaces on top of OpenAI GPT. The free version is GPT3.5 and the paid version for a limited number of users is GPT-4. GPT is a term for the engine that enables the conversational style by accessing an LLM (Large Language Model). The LLM is the dataset that has been trained so that GPT can deliver natural language results. So when people talk about GPT they mean both the engine and its related LLM.

The first GPT is over 5 years old. GPT-3 was trained on 570 GB of data from snapshots of the internet from 2016-2021 at an estimated training cost of $4.6m and it used 1.3 gigawatt-hours of electricity which is enough to power 121 homes in America for a year. Training GPT-4 was estimated to be $100m, which gives you some indication of the step change in its capabilities. Advances in GPT, the use cases, and supporting technologies are changing almost weekly.

ChatGPT limitations

ChatGPT’s fact-based knowledge is limited by the training of the LLM which was from 2016 to Sept 2021. So it has no knowledge of EinsteinGPT, or EOL for Process Builder Workflow, or Lizzo’s About Damn Time. And the second issue is the prompt and result conversation stream has a maximum of 4k tokens, which is about 16k characters. This is 150-250 sentences.

This is perfectly workable to use ChatGPT directly to write an email, summarize a webpage, write code, or a blog post. Provided of course, you are not relying on information after Sept 2021.

Building a ChatGPT clone

It also is relatively easy to build a ChatGPT clone using the APIs. This enables you to use your interface to help structure the prompt for the user or do something useful with the result, such as paste to the clipboard or store in a database. It took me less than 30 mins to connect OpenAI to an app I built to manage our cover band. It orders songs in a setlist based on the audience and suggests what to say between songs. But not for Lizzo’s About Damn Time!! I see a lot of apps being launched at are at this level.

Breaking the limitations

Future iterations of ChatGPT will increase these limits, but to do some really innovative work involving huge volumes of data such as suggesting how to implement changes to Salesforce based on requirements, the org configuration, and the Well-Architected Framework, we need to use some alternative approaches.

Architecting an advanced GPT app

But Generative AI (often referred to as GPT) has the potential to parse huge volumes of contextual data and not rely on the facts that OpenAI’s LLM has been taught. But we need to be able to get over the token limitation.

GPT technologies

There are technologies that are able to apply GPT to large data sets; semantic databases like PineCone and agent frameworks like AutoGPT.

- Semantic databases enable large text datasets to be uploaded and stored as numbers. Then GPT can make queries to pull back a small, relevant set of data to solve the problem it has been asked.

- Agents can be developed for specific use cases. For example a “User Story Agent” or a “Solution Agent”. They are told the resources they can access (app queries via APIs, semantic databases, LLMs etc). The agent does the following, using GPT as a helper

- It takes the prompt and asks GPT what resources it needs to use to solve it

- It asks GPT to create a task list of the actions to take

- For each task, it gets GPT to provide the queries for the resources

- It executes the tasks to get results by using GPT.

- It uses GPT to assess if the result solves the question, and if it doesn’t it goes back to GPT to create a different approach

- It collates the results and asks GPT to structure them as the result.

Pulling the pieces together

To make this work, you need to maintain the semantic databases and tune it for the type of problems you are trying to solve. For example, if it is being used to query the org configuration, it needs to be updated every time you make changes to the org metadata or documentation. But you also need to consider what org config information you are going to store. Too little and the results are meaningless. Structured the wrong way and the volume of data returned from the query overwhelms GPT.

You need to wrap the agent with an app that has a UI to capture the prompt and potentially give it context by querying other data so it does not need to be typed in. For example, if you are asking it to write a user story, and it is being launched from an activity box on a UPN process map, then there is a lot of context that it already has; process activity description, resources, inputs and outputs, attached notes.

Finally, you need the app to display the result and do something with it. In my user story example, it will create a user story with acceptance criteria for every resource on each activity box within the scope. It will store them, and also attach them to the related activity box.

Taking this example a little further. The app then takes each user story, accesses the semantic database with your org configuration, and the semantic database with the Salesforce Well-Architected Framework, and based on this suggests the best way to implement the changes.

JOIN THE WEBINAR

This is not theory or demo ware. You will see it in our Prod Org running against real org data and producing staggering results. Join the webinar to see it in action, but also understand the implications for every role in the Salesforce ecosystem

Sign up for

our newsletter

Subscribe to our newsletter to stay up-to-date with cutting-edge industry insights and timely product updates.

Ian Gotts

Founder & CEO6 minute read

Published: 18th May 2023