GPT is not an abbreviation of ChatGPT

Written by Ian Gotts

We need to be careful not to use ChatGPT and Generative AI (GPT) interchangeably, or to judge the potential of Generative AI by our experiences of ChatGPT. At the moment I am seeing lots of examples of this happening around the Salesforce ecosystem: in blog posts, LinkedIn posts and the training that is being offered.

GPT stands for Generative Pre-trained Transformer and is a generic term. ChatGPT is just one of many interfaces into OpenAI’s text GPT models (large language models, or LLMs), and it does not expose the full potential of GPT. I know it is confusing: ChatGPT has GPT in the name, which suggests that ChatGPT can harness the full power of GPT. But it can’t.

The risk is that someone who is experienced in using ChatGPT, and has completed one of the many training courses being marketed, then believes they have mastered GPT. They haven’t. It is rather like passing the Salesforce Admin certification and thinking you know everything about implementing Salesforce.

ChatGPT – AI stands for Another Interface

Car analogy

We all love analogies. The Porsche GT3 is a staggering change for people who have only driven a Honda Civic. It is so fast it is amazing it is allowed on the roads. But the Porsche GT3 is nothing compared with an F1 car. The Porsche is ChatGPT. The F1 car is GPT. You could complete Porsche’s advanced driving course and still not be able to get an F1 car off the start line without stalling it. One look at the steering wheel would leave you confused. Below is the steering wheel from a Ferrari F1 car. Can you find the launch control to get you off the start line?

Honda Civic = nothing

Porsche GT3 = ChatGPT

F1 = GPT

We need to change the narrative and broaden the ecosystem’s understanding of the potential of GPT.

ChatGPT – impressive, limited, but just the start

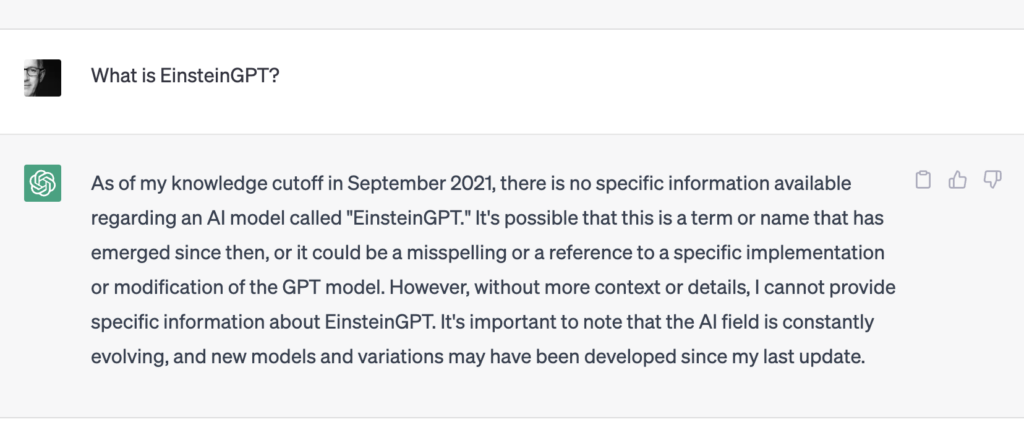

The OpenAI APIs are easy to use. Anyone can build a ChatGPT clone. It took me just 30 minutes to connect OpenAI to an app I built to manage our cover band, Jane Blonde and the Goldfingers. The band app holds our songs and a setlist of songs for each gig. We connected OpenAI so that it suggests the order of songs in the setlist based on the gig’s audience. We play covers of classic dance songs, and most of them were released before September 2021 (this date is important), so it knows them. It can also suggest what to say between songs. Sorry Lizzo, but About Damn Time was released in 2022, so ChatGPT doesn’t know about it, despite it winning a Grammy!
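The setlist integration boils down to a single API request. As a minimal sketch, assuming OpenAI’s Chat Completions request format (a `model` name plus a list of `messages` with `system` and `user` roles), the request body might be built like this. The function name `build_setlist_request`, the prompt wording and the song data are hypothetical, not the actual band app, and the code only builds the JSON body, so it runs without an API key.

```python
import json


def build_setlist_request(songs, audience, model="gpt-3.5-turbo"):
    """Build a Chat Completions request body asking the model to order a setlist.

    Hypothetical helper: `songs` is a list of (title, artist) tuples and
    `audience` is a free-text description of the gig's crowd.
    """
    song_lines = "\n".join(f"- {title} ({artist})" for title, artist in songs)
    return {
        "model": model,
        "messages": [
            # The system message sets the role the model should play.
            {
                "role": "system",
                "content": "You are the music director of a cover band "
                           "that plays classic dance songs.",
            },
            # The user message carries the gig-specific data.
            {
                "role": "user",
                "content": (
                    f"The audience for tonight's gig: {audience}.\n"
                    "Suggest the best running order for these songs, "
                    "and a short line to say between each song:\n"
                    f"{song_lines}"
                ),
            },
        ],
    }


if __name__ == "__main__":
    request = build_setlist_request(
        [("Dancing Queen", "ABBA"), ("September", "Earth, Wind & Fire")],
        "a 40th birthday party",
    )
    # This JSON body is what would be sent to the Chat Completions endpoint.
    print(json.dumps(request, indent=2))
```

Sending it is a single authenticated HTTP POST to `https://api.openai.com/v1/chat/completions`. The model only knows the songs from its training data; the audience description is what tailors the order.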

ChatGPT’s fact-based knowledge is limited by the LLM’s training data, which runs from 2016 to September 2021. So it has no knowledge of EinsteinGPT, the EOL of Process Builder and Workflow Rules, or Lizzo’s About Damn Time…

So lack of knowledge is clearly a limitation. If we want more current answers, we need to give it more information, which leads to the second limitation of ChatGPT: the prompt, the response and the conversation history together are limited to 4k tokens. A token is approximately 4 characters, so 4k tokens is roughly 16k characters, about the length of this blog. That is a huge limitation for some use cases, such as Org Discovery.
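To make the token arithmetic concrete, here is a rough budget check using the same 4-characters-per-token rule of thumb. It is an approximation only: real tokenizers (for example OpenAI’s `tiktoken` library) count differently, and treating “4k” as exactly 4,096 tokens, along with the function names, are assumptions for illustration.

```python
import math

CONTEXT_WINDOW = 4096  # assumed "4k" limit shared by history, prompt and reply
CHARS_PER_TOKEN = 4    # rule of thumb from the text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate a token count using the ~4 characters per token heuristic."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(history: list[str], prompt: str, max_reply_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """True if earlier turns + new prompt + space reserved for the reply fit."""
    used = sum(estimate_tokens(turn) for turn in history) + estimate_tokens(prompt)
    return used + max_reply_tokens <= context_window


if __name__ == "__main__":
    blog_post = "x" * 16_000  # ~16k characters, about the length of this blog
    print(estimate_tokens(blog_post))  # 4000: almost the entire window
    print(fits_in_context([], blog_post, max_reply_tokens=500))  # False
```

The design point is that the reply is carved out of the same window as the input, so pasting in a blog-length document leaves almost no room for an answer.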

The potential of GPT (Generative AI)

Generative AI (often referred to as GPT) has the potential to parse huge volumes of contextual data, rather than relying only on the facts that OpenAI’s LLM has been taught. But we need to get past the 4k token limit. Some technologies already apply GPT to large data sets: semantic databases like Pinecone, and agent frameworks like AutoGPT.

- A semantic (vector) database lets you upload large datasets, stored as vectors. For each query, the most relevant subsets are retrieved and passed to OpenAI as part of the prompt.

- Agents can be developed for specific use cases. Each agent is told which resources it can access (app queries via APIs, semantic databases, LLMs, etc.). You design an interface layer that takes the prompt and, using OpenAI, decides which agent to pass it to. The agent, again using OpenAI, looks at the prompt and builds a list of tasks to get the answer from the resources available to it. It then executes the tasks, gathers the data and presents it to OpenAI to format a reply in natural language. Yes, you read that right.
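The semantic database step can be sketched as nearest-neighbour search over vectors: each chunk of data is stored with a vector, the query is turned into a vector, and only the most similar chunks are placed in the prompt. This is a toy sketch under loud assumptions: the three-dimensional vectors and the sample org metadata are made up, a real system would get its vectors from an embedding model, and no Pinecone API is used here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vector, index, k=2):
    """Return the text of the k chunks whose vectors are most similar to the query."""
    ranked = sorted(index,
                    key=lambda item: cosine_similarity(query_vector, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, chunks):
    """Place only the retrieved chunks into the prompt sent to the LLM."""
    context = "\n\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Hypothetical (text, vector) pairs; real vectors come from an embedding model.
    index = [
        ("Flow: assign new Leads by region.", [0.9, 0.1, 0.0]),
        ("Validation rule: Opportunity Amount must be positive.", [0.1, 0.9, 0.1]),
        ("Process Builder: update Account rating when a Case closes.", [0.8, 0.0, 0.3]),
    ]
    query = [1.0, 0.0, 0.1]  # pretend vector for "What automation do we have?"
    print(build_prompt("What automation do we have?", top_k(query, index)))
```

Because only the top-k chunks reach the prompt, the dataset itself can be far larger than the 4k-token window.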
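The agent pattern can be sketched in the same spirit: a router picks an agent, the agent plans tasks against the resources it has been given, runs them, and hands the gathered data back for a natural-language reply. In this hypothetical sketch, every step that the text says uses OpenAI is replaced by a simple stand-in so it runs offline. The agent names, resources and keyword rule are illustrative only, and this is not how AutoGPT or ElementsGPT are actually implemented.

```python
from typing import Callable


def fake_llm_route(prompt: str, agent_names: list[str]) -> str:
    """Stand-in for the OpenAI call that picks an agent.

    A real router would show the LLM the prompt and the available agent names;
    this hypothetical keyword rule just keeps the sketch runnable.
    """
    return "org_agent" if "field" in prompt.lower() else "docs_agent"


class Agent:
    def __init__(self, name: str, resources: dict[str, Callable[[str], str]]):
        self.name = name
        # Resources the agent is allowed to use, e.g. app API queries or a semantic database.
        self.resources = resources

    def plan(self, prompt: str) -> list[str]:
        # Stand-in for asking the LLM to build a task list; here every resource is used.
        return list(self.resources)

    def run(self, prompt: str) -> str:
        # Execute each planned task and gather the results.
        results = [self.resources[task](prompt) for task in self.plan(prompt)]
        # In the real architecture the gathered data goes back to the LLM to phrase a reply.
        return f"[{self.name}] " + " | ".join(results)


def answer(prompt: str, agents: dict[str, Agent]) -> str:
    """Interface layer: route the prompt to one agent and return its reply."""
    chosen = fake_llm_route(prompt, list(agents))
    return agents[chosen].run(prompt)


if __name__ == "__main__":
    agents = {
        "org_agent": Agent("org_agent", {"metadata_api": lambda q: "Account has 3 custom fields"}),
        "docs_agent": Agent("docs_agent", {"semantic_db": lambda q: "Release note excerpt"}),
    }
    print(answer("Which custom field changed on Account?", agents))
```

Replacing `fake_llm_route` and `Agent.plan` with real LLM calls is what turns this skeleton into the architecture described in the list above.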

What if you could….

Using this architecture, a whole new world of possibilities opens up for applying the analytical power of GPT to large sets of data. For example:

- What if you knew every nuance of your Salesforce org and could “talk to your org”?

- What if you could ask questions of the Salesforce Well-Architected Framework?

- How much easier would it be to have a conversation with the latest Release Notes?

- What if you could write perfect user stories in one click based on your requirements, process documentation and your org configuration?

- What if it could then instantly figure out the perfect solution, tailored to your org and in line with the Well-Architected Framework?

- How much better would your documentation of metadata changes be if you could add a couple of bullet points and GPT did the rest?

This sounds like science fiction, or something that might be delivered in the next 2-3 years. Not true.

We have it in production right now. It’s called ElementsGPT.

You ain’t seen nothing yet

The GPT platforms and tooling are evolving quickly, so this is just the start. This is like the first iPhone launch, or the Netscape browser wars at the emergence of the internet.

Jason Wei, a researcher at OpenAI, has identified 137 emergent capabilities. They are already in the LLM training data, but they do not emerge until the model reaches a certain size threshold. For example, writing “gender-inclusive sentences in German” was hit and miss until the LLM reached a certain size; make the model only a little larger, and the capability pops out. GPT-3.5 failed the American Uniform Bar Exam, but GPT-4 aced it. These emergent capabilities hint at the untapped potential. Then layer on top of that new tooling frameworks that make it easier for applications to tap into that power.

Rest assured, the Elements AI team is tapped into what is evolving and is ready to exploit new technologies, but only when they are ready for prime time. It is a delicate balance, which is why ElementsGPT is only now in production, in a closed pilot.

Generative AI / GPT makes this both an empowering and a scary time. When the clock is reset to zero, the playing field is level for everyone, and those who understand how to use the tooling will emerge as winners. So spend time now understanding how to exploit ChatGPT, but don’t be fooled into thinking that you are tapping into the full power of GPT. We are all on a journey of learning at F1 speed.

Final word

I’ll leave you with a quote from Adrian King, Elements CTO. He posted in our company Slack that the ElementsGPT app was in production and explained what it could do. One of the execs replied, “This is cool”. Adrian’s reply says it all:

It is actually way more than cool. It is profound. This is where AI is going to help you design your changes based on the intelligence you have captured in Elements.

Listen to the SalesforceBen webinar to see the future


Ian Gotts

Founder & CEO

Published: 14th May 2023